Overview

Use betting market mechanisms to quantify bias in the news media and provide an alternative business model for news media organizations in an effort to curtail fake news.

A demo version is available at rashomonnews.com.

The problem statement

Fake news is so prevalent because all of the incentives are misaligned. Consumers are incentivized to become more tribal, to polarize, and to seek out what confirms their worldview. Media organizations are desperate enough to go along with this because it generates enough ad revenue to get by. Neither side is incentivized to try to figure out the truth. What analysis led to this belief? Consider:

First, what are the incentives for the news consumer? Most consumers would probably say they keep up with the news to stay informed. But this is actually not true. People keep up with the news to:

- Gather evidence to support their worldview. This leads to confirmation bias.

- Convince people they admire or think are smart that they, the consumer, are smart too. This leads to filter bubbles.

- Signal to people they admire or think are smart that they, the consumer, share common ground. This leads to echo chambers.

That’s one end. The other end is the media companies. They would probably say that their incentive is their civic duty to shine a light on the ills of society. But really, their incentives are:

- Survival. Many papers are simply trying to adapt to a fast-changing landscape. Many media companies are looking for a sustainable business model in this information age because print doesn’t sell and competitors will often give content away for free, setting a market price of $0.

- Profit. This might mean subscriptions (but that means paywalls) or it could mean advertisements. Trying to get eyeballs on ads generally means that papers resort to ‘viral’ techniques and in general sub-par journalism.

- Look like they have integrity. It might sound like I’m being cynical, but I don’t mean it in a bad way. The newspapers want to be relevant and trusted so that they can convert that into subscriptions, so they have to earn that trust in some way or at least look like they deserve that trust.

The proposed solution

Ideally, if I could, I would simply pay everyone to read as much of the news as possible, from all sides equally. Everyone gets paid to read everything: Fox, CNN, Infowars, Huffington Post, all of it. My thought here is that the only thing that would get people past the incentive to chase only the news they want would be paying them to give an equal chance to the news they don’t like. But I’m not remotely wealthy enough to do that.

But the next best thing would be to offer the opportunity to get paid for reading something you potentially disagree with. The closest mechanism to that is a bet. And in fact, betting on whether you think the news is fake or not is a much better solution, because it forces you to have what Nassim Nicholas Taleb calls “skin in the game.” You will be much more hesitant to call something fake news unless you are sure it is fake news.

But what about the media organizations? How does news consumers betting on their content help a news organization? The best way is through a betting exchange, where the exchange earns a commission on each bet. Betting exchanges help facilitate setting up the bet, maintain fair odds (fairer than a bookie’s, at least), and, if run with the intent to supply news companies with a commission, could eliminate the clickbait, ad-driven business model that’s destroying the idea of truth.

Well, supposing you buy this logic, the question now is how do we do this? Imagine a reader receives anonymized, randomized articles (without giveaways such as the channel or author) and must decide at the end whether each one is Democratic or Republican, sponsored content or genuine reporting, or even whether the article is from a joke news site or not.

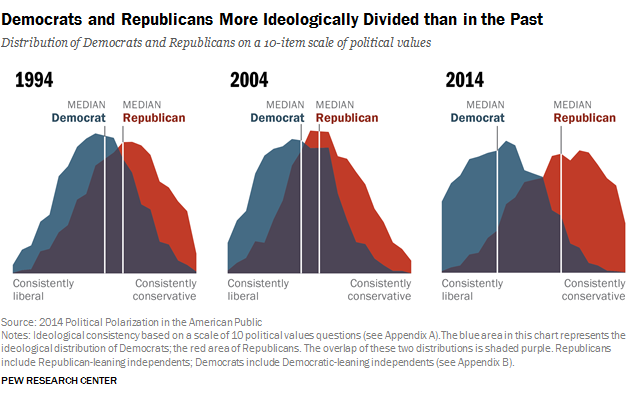

But how do you know if you are correct? Everyone will dispute the truth of an event or thought piece. This is true, but it is much harder to dispute the political leanings of a publication on a scale relative to other publications. I think most will agree that Fox News is nowhere near as far left as Antifa News. It is about where the article falls on a relative scale compared to its peers, so to speak. Sometimes I’ve heard this called the Overton window (it’s not what that really is, but it’s still interesting).

Other organizations, such as allsides.com, already have such relative ratings. They in fact use a method similar to the one proposed here, having evaluators with stated biases read anonymized articles to provide and aggregate ratings on sources. These ratings are a good place to start with this system design, and a voting mechanism can be introduced later. Incidentally, this would operate closely on Robin Hanson's mantra "We vote on values, we bet on beliefs."
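As a rough illustration of the mechanism described above, the sketch below settles a single bet against a reference rating. Everything in it is an assumption made for illustration: the `Bet` record, the placeholder source names and ratings in `REFERENCE_RATINGS`, and especially the even-money payout rule, since the demo's actual payout logic is not described here.

```python
from dataclasses import dataclass
from decimal import Decimal

# Five-point scale used in this write-up, ordered left to right.
BIAS_SCALE = ["far left", "left", "center", "right", "far right"]

# Hypothetical reference ratings. The source names and labels are placeholders,
# not real ratings taken from any rating organization.
REFERENCE_RATINGS = {
    "source_a": "left",
    "source_b": "far right",
}


@dataclass(frozen=True)
class Bet:
    user: str
    source: str     # hidden from the user while they read the article
    guess: str      # the bias label the user bets on
    stake: Decimal  # amount of (fake) money wagered


def settle(bet: Bet) -> Decimal:
    """Return the change in the user's balance.

    Assumes an even-money payout: win the stake if correct, lose it otherwise.
    """
    if bet.guess not in BIAS_SCALE:
        raise ValueError(f"unknown bias label: {bet.guess!r}")
    correct = REFERENCE_RATINGS[bet.source] == bet.guess
    return bet.stake if correct else -bet.stake


win = settle(Bet("alice", "source_a", "left", Decimal("1.50")))
loss = settle(Bet("bob", "source_b", "right", Decimal("2.00")))
print(win, loss)
```

A real exchange would also need to take its commission somewhere in `settle`, which is left out here because the rate and the point at which it is charged are open design questions.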

With this system sketched out, a demo version was developed here: rashomonnews.com. This demo was written in Python using Django, with a PostgreSQL database, Redis store, and minimal frontend styling using Bootstrap. A large part of this project was to learn the fundamentals of web development and design.

Analysis

The demo, while far from fully featured, was beta-tested (more accurately alpha-tested) during the election year of 2020. While testing was limited to only 72 users and the data is somewhat sparse, the data collected during this time is unique and valuable due to the historic nature of the 2020 election.

As an overview, a total of 72 users tested this system. These users were mostly friends and family who responded to requests for testing on Facebook, Instagram, Twitter or other social media. Each account in the demo was preloaded with ten fake dollars. Of the $720 available, $217.20 worth of bets were placed, so only 30.2% of these funds were used. A total of 223 bets were placed before activity on the platform effectively stopped. Interestingly enough, there were more incorrect bets (125) than correct bets (98), but this was somewhat mitigated by the amounts staked: $101.26 went to correct bets and $115.94 to incorrect bets, a narrower gap than the bet counts alone would suggest. If we use the amount as a proxy for confidence, users staked less on average on bets that turned out to be incorrect and more on bets that turned out to be correct, which suggests they were somewhat aware when they might be placing a wrong bet. Please see the following pie charts for an overview of how the bets and bet amounts were placed.
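The headline figures can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in this section; the variable names are mine, and the average stake per bet is a derived quantity rather than something reported by the demo.

```python
from decimal import Decimal

users = 72
starting_balance = Decimal("10")   # fake dollars per user
correct_bets, incorrect_bets = 98, 125
correct_amount = Decimal("101.26")
incorrect_amount = Decimal("115.94")

total_funds = users * starting_balance            # money available to bet
total_bets = correct_bets + incorrect_bets
total_staked = correct_amount + incorrect_amount
utilization = total_staked / total_funds          # share of funds actually bet

# Average stake per bet, used here as a rough proxy for confidence.
avg_correct = correct_amount / correct_bets
avg_incorrect = incorrect_amount / incorrect_bets

print(f"funds available: ${total_funds}")
print(f"bets placed: {total_bets}, staked: ${total_staked}")
print(f"utilization: {utilization:.1%}")
print(f"share correct by count: {Decimal(correct_bets) / total_bets:.1%}")
print(f"share correct by amount: {correct_amount / total_staked:.1%}")
print(f"average stake, correct: ${avg_correct:.2f}, incorrect: ${avg_incorrect:.2f}")
```

The average stake on correct bets comes out slightly higher than on incorrect bets, which is the basis for reading the bet amount as a confidence signal.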

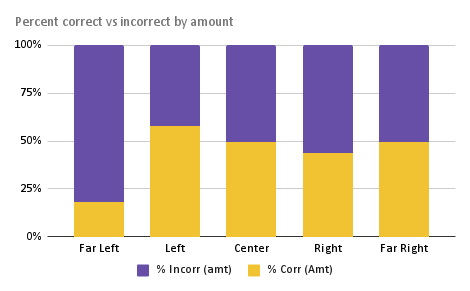

This is interesting, but the data becomes more useful when you group the bets by the bias of the news sources. In the following two bar charts, the percent correct vs incorrect is plotted for far left, left, center, right and far right news sources. This is done both by the number of bets placed and by the amount of money placed. When we look at the percent correct or incorrect by amount, we can see users got around 50% correct when the news source was left, center, right or far right. This is better than the 20% we would expect if the bets were placed at random across the five categories. However, a larger amount was bet incorrectly on far left sources, indicating overconfidence in identifying what was considered a far left source.

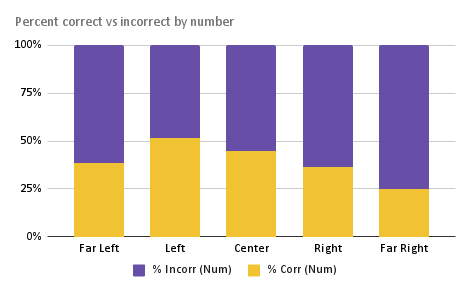

If we look at the chart breaking out percent correct vs incorrect by number of bets placed for each source bias, we can see that users were most comfortable identifying left-leaning sources, correctly identifying them 52% of the time. Users correctly identified far right sources the least, only around 25% of the time. Especially interesting is that by count, users could correctly identify far left sources fairly well, but by amount they could not capitalize on this insight, suggesting that they staked less on their correct far left calls than on their incorrect ones.

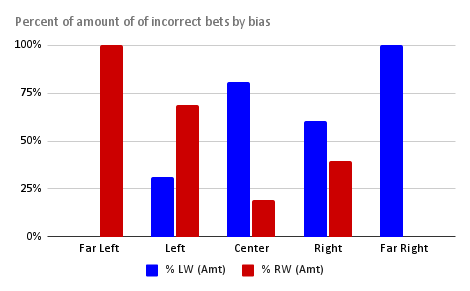
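The grouping behind these two bar charts can be sketched as follows. The bet records are invented for illustration and are not the data collected by the demo; `percent_correct_by_bias` is a hypothetical helper, not code from the project.

```python
from collections import defaultdict

BIAS_SCALE = ["far left", "left", "center", "right", "far right"]

# Hypothetical bet records: (true source bias, user's guess, stake in dollars).
bets = [
    ("far left", "far left", 0.50),
    ("far left", "left", 2.00),
    ("left", "left", 1.00),
    ("left", "center", 1.00),
    ("center", "center", 1.50),
    ("far right", "right", 1.00),
]


def percent_correct_by_bias(records):
    """Return {bias: (share correct by count, share correct by amount)}."""
    counts = defaultdict(lambda: [0, 0])        # [correct bets, all bets]
    amounts = defaultdict(lambda: [0.0, 0.0])   # [correct stake, all stake]
    for true_bias, guess, stake in records:
        hit = guess == true_bias
        counts[true_bias][0] += int(hit)
        counts[true_bias][1] += 1
        amounts[true_bias][0] += stake if hit else 0.0
        amounts[true_bias][1] += stake
    # Report in left-to-right order, skipping categories with no bets.
    return {
        bias: (counts[bias][0] / counts[bias][1],
               amounts[bias][0] / amounts[bias][1])
        for bias in BIAS_SCALE
        if bias in counts
    }


shares = percent_correct_by_bias(bets)
random_baseline = 1 / len(BIAS_SCALE)  # chance of a random guess being right

for bias, (by_count, by_amount) in shares.items():
    print(f"{bias:>9}: {by_count:.0%} by count, {by_amount:.0%} by amount")
print(f"random baseline: {random_baseline:.0%}")
```

In this toy data the far left row is correct half the time by count but only a fifth of the time by amount, which is the same kind of gap between the two views discussed above.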

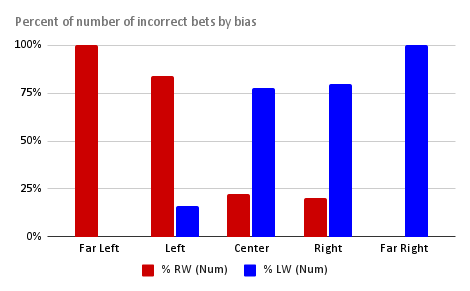

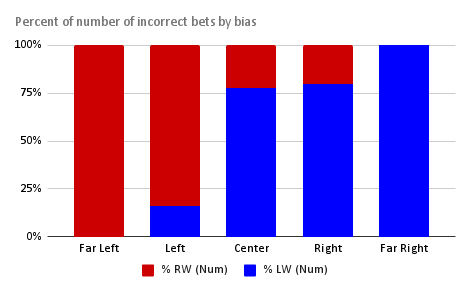

We can also look at the incorrectly placed bets by whether the bet was placed too far to the left or too far to the right. If a user places a bet on a center-leaning source and claims that source is left leaning, this implies that the user has a right wing bias, because they viewed the source as more left leaning than it truly is. And vice versa: if a user claims a center source is right leaning, that implies the user is more left leaning. This was the theory; the data below shows a weak version of it. The breakdown of incorrect bets by amount is not as revealing, but is presented for the sake of completeness.

The breakdown of incorrect bets by number is somewhat more revealing. We should note that for far left and far right sources, the only possible error is towards the right and towards the left, respectively. This is because there is no option further to the left than far left and no option further to the right than far right. With this in mind, one can see in the following charts that bets on left wing sources erred towards the right, while bets on right wing and center sources erred towards the left. This could imply that users are already polarized towards the extreme ends, but an alternate explanation is that users try to place sources as close to the center as possible.
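The direction of an error follows directly from the ordering of the scale. The sketch below is one way to express it; the function names are hypothetical and this is not the code used to produce the charts.

```python
BIAS_SCALE = ["far left", "left", "center", "right", "far right"]
POSITION = {label: i for i, label in enumerate(BIAS_SCALE)}


def error_direction(true_bias: str, guess: str) -> str:
    """Classify a bet as 'correct', 'too far left' or 'too far right'."""
    diff = POSITION[guess] - POSITION[true_bias]
    if diff == 0:
        return "correct"
    # Under the theory in the text, a guess placed too far left suggests a
    # right-leaning user, and a guess placed too far right a left-leaning one.
    return "too far left" if diff < 0 else "too far right"


def possible_errors(true_bias: str) -> set:
    """Return the error directions that are possible for a given source bias."""
    return {error_direction(true_bias, g) for g in BIAS_SCALE if g != true_bias}


print(error_direction("center", "left"))
print(sorted(possible_errors("far left")))
print(sorted(possible_errors("far right")))
print(sorted(possible_errors("center")))
```

The endpoints of the scale can only produce one kind of error, which is why the far left and far right bars are one-sided by construction.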

Another interesting observation is how suddenly the direction of error switches from mostly right-leaning errors to mostly left-leaning errors. This is easier to see in the stacked bar graph below, which shows the percent of incorrectly placed bets by direction of error for each source bias.

Further Plans

At the moment, development on this demo has stalled. However, the idea is extremely flexible and many extensions are possible, including development of the demo into a fully featured platform. This could include its own cryptocurrency, a social media component to share articles after bets have been placed, the possibility of greater financialization and data analysis, utilities like a Bloomberg Terminal type of interface to allow users to keep track of bets, and integration into more traditional prediction markets like PredictIt or Polymarket. It is a bit of a pipe dream, but one idea was to combine Peter Turchin's cliodynamics, this news betting idea and prediction markets so that we could create models of the past, present and future that feed into one another.

The idea is extensible even beyond the limited political spectrum of Western politics. One could create different axes, such as whether the article a user reads is sponsored content or not. It's possible to introduce different "games" as well, such as spread betting, betting against the algorithm, betting against individual users, or betting against the house (as it currently is). There may also exist analogies to other financial mechanisms, such as creating some sort of analogue to options markets.

On a more technical note, the demo was developed fairly bare-bones. One major addition for the future is a mobile app alongside the web app. Migrating the frontend to React Native would help with this effort. There are also known technical issues: the authentication system is buggy and doesn't have a password reset, and the images attached to the articles sometimes give away the news source. A more experienced developer could probably address these issues quickly.

Conclusions

Rashomon can't be claimed to be a total success because it never got enough users to yield a statistically significant data set. However, some valuable data was collected. This data was collected during the tumultuous 2020 election, and that in itself makes it valuable as a snapshot of that period. Rashomon was also valuable in that it was a decently original idea and the execution was a tremendous learning opportunity. While I do wish I could go further with the idea, I am grateful for what I've been able to do with it thus far.